When managing AWS infrastructure, we often need to restructure our CloudFormation (CF) stacks while preserving critical resources such as DynamoDB tables or container registries. In this post, we walk through how we imported three existing DynamoDB tables into a new CF stack after deleting their original stacks, without any data loss or service interruption.

Context

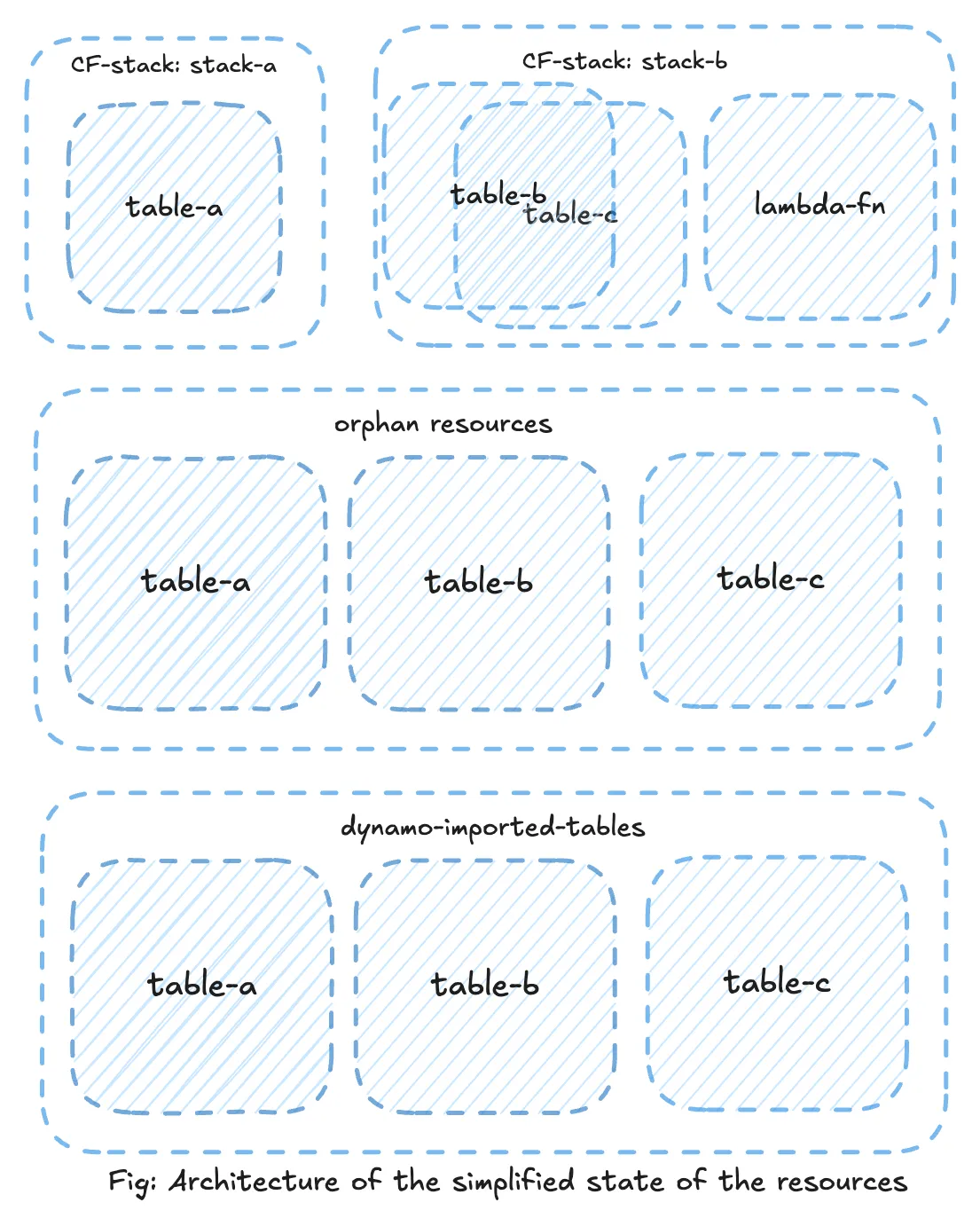

We had two CF stacks consisting of the following resources. We use example names here for simplicity.

- stack-a:
  - dynamodb: table-a

- stack-b:
  - dynamodb: table-b and table-c
  - lambda function: ln-1
  - IAM role

Our resources were managed as IaC, written using CDK. Our goal was to delete the existing CloudFormation stacks while keeping the DynamoDB tables intact, and eventually import those tables into a new stack. It is important to note that we had manually turned on Point-In-Time Recovery (PITR) and deletion protection on the tables, to allow recovery and to prevent accidental deletion. When the two stacks were first written, removalPolicy for the DynamoDB tables was set to destroy (removalPolicy: RemovalPolicy.DESTROY), meaning that when a CF stack is deleted, its associated tables are deleted as well. We didn’t pay much attention to this particular point, which bit us badly a few moments later 😄!
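To make the effect of the removal policy concrete, here is a minimal sketch of how it surfaces in the generated template. It is plain TypeScript rather than real CDK code: the `RemovalPolicy` type, `toPolicyAttributes`, and `tableResource` are hypothetical names introduced only for illustration, and the table properties are trimmed down to the name.

```typescript
// Hypothetical stand-in for the CDK removal policy; NOT the real aws-cdk-lib enum.
type RemovalPolicy = "DESTROY" | "RETAIN";

// The two CloudFormation resource attributes that the removal policy controls.
interface PolicyAttributes {
  DeletionPolicy: "Delete" | "Retain";
  UpdateReplacePolicy: "Delete" | "Retain";
}

// Maps a removal policy to the attributes that end up in the template.
function toPolicyAttributes(policy: RemovalPolicy): PolicyAttributes {
  const value = policy === "RETAIN" ? "Retain" : "Delete";
  return { DeletionPolicy: value, UpdateReplacePolicy: value };
}

// Builds a trimmed table resource; key schema and other properties are omitted.
function tableResource(tableName: string, policy: RemovalPolicy) {
  return {
    Type: "AWS::DynamoDB::Table",
    ...toPolicyAttributes(policy),
    Properties: { TableName: tableName },
  };
}

// With DESTROY, both attributes are "Delete", so deleting the stack targets the table too.
console.log(JSON.stringify(tableResource("table-a", "DESTROY"), null, 2));
```

Switching the second argument to `"RETAIN"` flips both attributes to `Retain`, which is the state we wanted the tables to be in before any stack deletion.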

Migration Process

Step 1: Delete stack-a

CloudFormation -> Stacks -> stack-a -> Delete

But the deletion didn’t go as planned due to a configuration conflict:

- DeletionPolicy and UpdateReplacePolicy were both set to Delete. This meant that CloudFormation tried to delete table-a during stack deletion, as we mentioned earlier.

- Deletion protection was enabled on the DynamoDB table, which prevented CloudFormation from actually deleting it. This is what saved us, as deleting the table would have deleted production data!

Anyway, this conflict left the stack in a DELETE_FAILED state, which meant we could no longer update the stack via CDK. After the deletion failed, we tried to set removalPolicy: RemovalPolicy.RETAIN using CDK, but the update failed. We tried a couple of other approaches, but none of them worked.

Rescue:

- So we retried the delete from the console, this time with the retain resources option. This allowed us to successfully delete the stack without affecting the table, effectively leaving it as an orphaned resource.
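We did this step in the console, but the same retry can be expressed as a request to the CloudFormation DeleteStack API, which accepts a list of logical resource IDs to retain for stacks in the DELETE_FAILED state. The sketch below only builds the request parameters as a plain object. `buildRetryDeleteRequest` is a hypothetical helper, and `TableA` is an assumed logical ID, since the real one depends on what CDK generated for the table.

```typescript
// Request shape mirroring the StackName and RetainResources parameters of DeleteStack.
interface DeleteStackRequest {
  StackName: string;
  RetainResources?: string[];
}

// Builds the retry request. Retaining resources only applies to DELETE_FAILED stacks,
// so any other status is rejected up front.
function buildRetryDeleteRequest(
  stackName: string,
  stackStatus: string,
  logicalIdsToRetain: string[],
): DeleteStackRequest {
  if (stackStatus !== "DELETE_FAILED") {
    throw new Error(`Expected a DELETE_FAILED stack, got ${stackStatus}`);
  }
  if (logicalIdsToRetain.length === 0) {
    throw new Error("At least one logical resource ID must be retained");
  }
  return { StackName: stackName, RetainResources: [...logicalIdsToRetain] };
}

// "TableA" is a hypothetical logical ID for table-a.
const retryRequest = buildRetryDeleteRequest("stack-a", "DELETE_FAILED", ["TableA"]);
console.log(JSON.stringify(retryRequest, null, 2));
```

An object like this could be passed to whichever CloudFormation client you use. We have only verified the console flow ourselves.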

Step 2: Delete stack-b

Having learned our lesson from stack-a, this time we updated the CF template using CDK (removalPolicy: RemovalPolicy.RETAIN) before attempting CloudFormation -> Stacks -> stack-b -> Delete.

The updated policies ensured that table deletion would be skipped during the stack’s deletion. As a result, the deletion of stack-b proceeded without any issues, and both tables were safely retained as orphaned resources.

Step 3: Import orphaned resources

With the original stacks deleted, the three DynamoDB tables were now orphaned resources. They were still fully functional, and the Fargate service consuming those tables kept working as expected.

- Prepared and saved a JSON template (DynamoTemplateToImport.json, link in reference section) to import the existing resources (i.e. the three DynamoDB tables) into a new CF stack.

- CloudFormation → Create stack: With existing resources (import resources) → follow on-screen instructions → select upload a template file (choose the DynamoTemplateToImport.json file) → continue → On the Identify resources page, identify each target resource with a unique identifier (in this case the DynamoDB table name) → Import resources.

- New CF Stack: We created a new CloudFormation stack named dynamo-imported-tables.
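For readers who want a feel for what an import involves, the sketch below generates a template skeleton and the matching resource identifiers for the three tables. It is not our actual DynamoTemplateToImport.json. The logical IDs (`TableA` etc.), the `pk` key schema, and the billing mode are placeholder assumptions, and a real template has to describe each table's actual configuration. What we are confident about is that every resource being imported needs a DeletionPolicy attribute, and that a DynamoDB table is identified by its TableName.

```typescript
// One table to import: a hypothetical logical ID plus the existing table name.
interface ImportTarget {
  logicalId: string;
  tableName: string;
}

interface TableResource {
  Type: "AWS::DynamoDB::Table";
  DeletionPolicy: "Retain";
  UpdateReplacePolicy: "Retain";
  Properties: Record<string, unknown>;
}

// Mirrors the entries CloudFormation expects when identifying resources to import.
interface ResourceToImport {
  ResourceType: string;
  LogicalResourceId: string;
  ResourceIdentifier: Record<string, string>;
}

// Builds a template skeleton with one table resource per target.
function buildImportTemplate(targets: ImportTarget[]) {
  const Resources: Record<string, TableResource> = {};
  for (const target of targets) {
    Resources[target.logicalId] = {
      Type: "AWS::DynamoDB::Table",
      DeletionPolicy: "Retain", // required on every imported resource
      UpdateReplacePolicy: "Retain",
      Properties: {
        TableName: target.tableName,
        // ASSUMPTION: placeholder schema; replace with the table's real definition.
        AttributeDefinitions: [{ AttributeName: "pk", AttributeType: "S" }],
        KeySchema: [{ AttributeName: "pk", KeyType: "HASH" }],
        BillingMode: "PAY_PER_REQUEST",
      },
    };
  }
  return { AWSTemplateFormatVersion: "2010-09-09", Resources };
}

// Pairs each logical ID in the template with the existing table name.
function buildResourcesToImport(targets: ImportTarget[]): ResourceToImport[] {
  return targets.map((target) => ({
    ResourceType: "AWS::DynamoDB::Table",
    LogicalResourceId: target.logicalId,
    ResourceIdentifier: { TableName: target.tableName },
  }));
}

const importTargets: ImportTarget[] = [
  { logicalId: "TableA", tableName: "table-a" },
  { logicalId: "TableB", tableName: "table-b" },
  { logicalId: "TableC", tableName: "table-c" },
];
console.log(JSON.stringify(buildImportTemplate(importTargets), null, 2));
console.log(JSON.stringify(buildResourcesToImport(importTargets), null, 2));
```

The second list corresponds to what the Identify resources page asks for in the console: one table name per logical ID in the template.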

Considerations

- We had to rehearse the whole migration process a few times in the staging environment.

- We had to ensure that table deletion protection and PITR were enabled, as we couldn’t afford to lose any data.

- We had to pay extra attention to make sure there was no service interruption, as both the services and the tables were in production.

- In the staging environment, we even set up a dummy service and connected it to those tables to observe whether there were any hiccups or interruptions.

- We contacted AWS technical support and domain experts to validate our migration process and to check for any other edge cases we should take into account.

- We deleted the lambda function during stack-b's removalPolicy update. We noticed that it took about 41 minutes for the stack to be updated. The reason was that our lambda-fn was attached to a VPC, so in order to delete the lambda, all the associated ENIs needed to be drained and cleaned up. A detailed explanation can be found in the Stack Overflow reference.